Integration tests check whether different parts of your system work together correctly. Most backend integration tests depend on a real database, and as your schema and test suite grow, these tests become slower and the feedback loop suffers.

Across teams and projects, I’ve seen this pattern repeat. So I set out to fix it. This is the story of what I built, why I built it, and how it works under the hood.

Why integration tests get slow

Integration tests start out fast, but as an API grows the database layer becomes the bottleneck.

Mocking is not enough

There is a moment in every backend project where your team argues about whether tests should hit the database at all.

Mocking the database sounds ideal at first. It is fast, simple and predictable. If a test is failing, it is almost always your own code, not the database. But after months of real development the cracks start to show.

- Your mocks do not enforce real relational constraints. They will let you create data that a real database would reject, for example invalid foreign keys or missing required relations.

- Your mocks do not enforce unique or index-backed rules. Real databases block duplicate values, enforce unique indexes and apply constraints during writes. Mocks accept everything unless you manually implement the same checks.

- Your mocks do not reproduce real database errors. A real database throws errors for things like invalid UUIDs, invalid enum values, foreign key violations, not-null violations or invalid JSON. Mocks only throw what you manually program into them.

- Your mocks do not behave like a real database when paginating. Offset- and cursor-based pagination both rely on real ordering rules, index selection, collation and type comparison semantics. Mocks simply slice JavaScript arrays, so their pagination results do not match real database behavior.

- Your mocks do not match real-world behavior at scale. In a real database, large datasets, index-backed queries, joins and sorting behave differently and can degrade. Mocks always behave as if the dataset is tiny, predictable and perfectly sorted.

And worst of all, when you mock the database, you miss an entire class of bugs: those that come from misaligned schema changes, migrations, seed assumptions or data shape mismatches.
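To make two of these gaps concrete, here is a deliberately naive in-memory mock. It is a hypothetical sketch, not code from any real project: `InMemoryUserRepository` and its methods are illustrative names.

```typescript
// A deliberately naive in-memory "users" mock of the kind that often replaces a database in tests.
interface User {
  id: number;
  email: string;
}

class InMemoryUserRepository {
  private rows: User[] = [];
  private nextId = 1;

  // Postgres would reject a duplicate email if the column had a unique index
  // (SQLSTATE 23505, unique_violation). This mock accepts it silently.
  create(email: string): User {
    const user: User = { id: this.nextId++, email };
    this.rows.push(user);
    return user;
  }

  // Offset pagination by slicing insertion order: no ORDER BY, no collation, no type semantics.
  findPage(offset: number, limit: number): User[] {
    return this.rows.slice(offset, offset + limit);
  }
}
```

Against a real users table with a unique index on email, the second insert of the same address would fail, and pagination would follow whatever ORDER BY the query specifies. A test built on this mock would notice neither.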

For these reasons, I prefer integration tests with a real database instance.

A single, realistic seed baseline

Before talking about performance, it is important to talk about different testing strategies.

If your system is multi-tenant or has complex relational structures, building all required entities inside each test quickly becomes repetitive. You end up duplicating logic across tests, creating slightly different versions of the same objects, and drifting away from what the real application data actually looks like.

A realistic baseline seed is a predefined set of records that represents a stable and realistic starting state for your system. It acts as a single authoritative seed that every test begins with. The seed can be small or large, depending on what your tests need. Some suites only require a handful of entities. Others rely on hundreds of records to properly exercise pagination, filtering, or performance-sensitive features.

The benefit is consistency. Tests no longer drift apart, your test data mirrors real application behavior, and when your domain model evolves, you update the seed once and every test benefits from the change.

This is why I consider a realistic baseline seed the preferred model for database-backed integration tests.

Performance Challenges

Once you accept that real integration tests are valuable, you run into the next problem: performance.

The downside of using a realistic baseline seed is the cost of preparing it. Most teams reset the database before each test and then run the full seed script again. This gives good isolation, but at scale it becomes extremely slow.

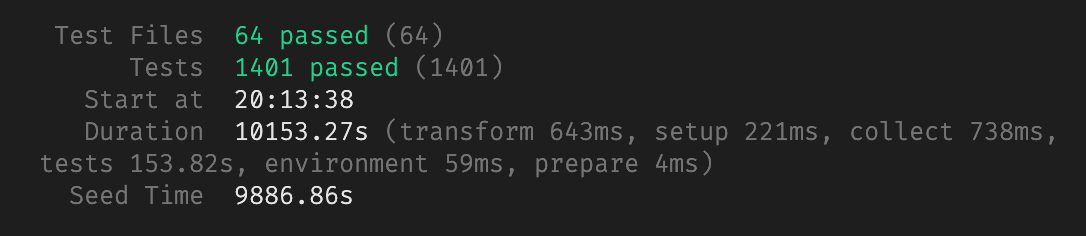

In my case, 1401 tests across 64 suites each ran a full reseed. With seeding at ~7 seconds and each test at ~100 ms, the full run took 10,153.27 seconds, nearly 3 hours.
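Because the reseed runs once per test, total run time grows linearly with the number of tests, and the seed dominates each test's cost. Plugging in the approximate figures above gives an estimate close to the measured 10,153.27 seconds:

$$
t_{\text{total}} \approx N\,(t_{\text{seed}} + t_{\text{test}}) = 1401 \times (7\ \text{s} + 0.1\ \text{s}) \approx 9{,}947\ \text{s} \approx 2.8\ \text{h}
$$

Roughly $7 / 7.1$, more than 98% of that time, is spent reseeding rather than testing.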

This is not what integration tests should feel like.

Missing JavaScript Tooling

Wrapping each test case in a database transaction and rolling it back afterwards is a proven pattern. Frameworks like Django, Laravel, Rails, and Spring have used it for decades because it gives you realistic behavior, full isolation and extremely fast test runs.

But somehow this pattern never became mainstream in the fragmented JavaScript ecosystem. There are many ORMs, many test runners and many relational databases in use, so patterns that might be obvious in other stacks do not always translate cleanly into JavaScript.

A few community projects tried to fill this gap:

- @quramy/jest-prisma brought transaction-based testing to Prisma inside Jest. It worked well, but it is no longer actively maintained.

- vitest-environment-vprisma reused parts of the former in a Vitest environment, but also became unmaintained.

The idea is solid, the available tooling is not.

Making database integration tests fast

Once you look past ecosystem limitations, the pattern for fast and isolated integration tests is simple: seed your database once, run every test inside a transaction and roll it back afterwards. Each test sees the same baseline state, nothing leaks into the next one and you never need to reseed between tests.
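Stripped of any framework, the pattern is BEGIN before each test and ROLLBACK after it, both on the same connection. The following is a minimal sketch; `Queryable` and `withRollback` are hypothetical names, and the interface is only an assumption about what a driver offers (a node-postgres `Client`, or a `PoolClient` obtained from `pool.connect()`, exposes a compatible `query` method):

```typescript
// Anything that can run a SQL string on one fixed connection (hypothetical interface).
interface Queryable {
  query(sql: string): Promise<unknown>;
}

// Hypothetical helper: runs one test body inside a transaction that is always rolled back.
async function withRollback<T>(db: Queryable, testBody: () => Promise<T>): Promise<T> {
  await db.query('BEGIN');
  try {
    return await testBody();
  } finally {
    // Roll back whether the test passed or failed, so the seeded baseline stays untouched.
    await db.query('ROLLBACK');
  }
}
```

In a Vitest suite the two statements would usually live in beforeEach and afterEach hooks. The hard part is not the SQL but guaranteeing that the code under test runs on that same connection, which an ORM with its own connection pool does not do by default.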

This approach gives you real database behavior with near-unit-test performance. There is no cleanup code, no repeated inserts and no fluctuation between tests, only deterministic results at high speed.

In my own work I use Prisma a lot, Postgres is my go-to relational database and Vitest has become my preferred test runner. Before I built something dedicated for this pattern, I relied on a mix of unmaintained libraries held together with patch-package (later pnpm patch) and duct tape.

After wrestling with that setup for long enough, I decided to stop fighting my tools and built the missing piece: vitest-environment-prisma-postgres.

My library automating this pattern

Vitest lets you plug in a custom test environment, a small module that runs before your tests and controls how they execute. I built one of these environments to apply the transaction-based testing pattern in a predictable and ergonomic way.

It does not run migrations, seed your database or start Postgres for you. It focuses on one job: wrapping every individual test case in a Postgres transaction and rolling it back afterwards.

You can find the code on GitHub:

https://github.com/codepunkt/vitest-environment-prisma-postgres

The core mechanism

Instead of listing every internal detail up front, let’s have a look at the core mechanism first:

- The library exposes a Prisma client on a global, which is used to mock your API’s Prisma client in tests.

- It starts a transaction before each test and rolls it back afterwards

- Nested transactions are supported through Postgres savepoints, so it doesn’t matter whether your business logic uses transactions or not.

This is all you need for fast and isolated integration tests: no per-test setup, no cleanup code and no reseeding.

Improved Performance

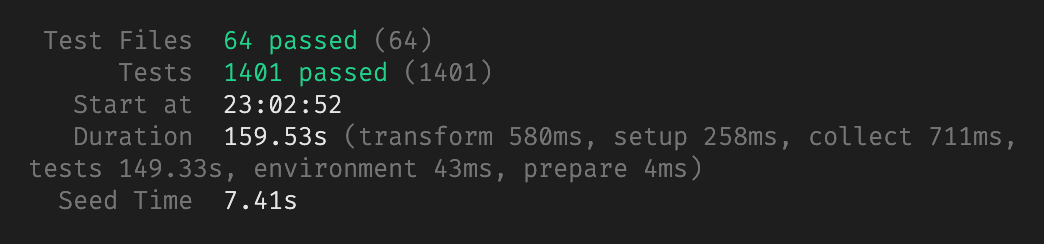

Before using this environment, my full test run took a bit under 3 hours. With transaction wrapped tests and a single initial seed, the same 1401 tests in 64 suites now completed in 159.53 seconds, which is a bit under 3 minutes.

That is a reduction in total run time of roughly 98%.
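The percentage describes the drop in wall-clock time; the same two measurements can also be read as a speedup factor:

$$
1 - \frac{159.53\ \text{s}}{10{,}153.27\ \text{s}} \approx 1 - 0.0157 \approx 98.4\,\%,
\qquad
\frac{10{,}153.27\ \text{s}}{159.53\ \text{s}} \approx 63.6
$$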

Setting up your test environment

After installing my library and making sure its peer dependencies vitest, prisma and @prisma/adapter-pg are available in your project, you enable the environment in your vitest.config.ts:

```typescript
// vitest.config.ts
import { defineConfig } from 'vitest/config';

export default defineConfig({
  test: {
    environment: 'prisma-postgres',
    environmentOptions: {
      'prisma-postgres': {
        clientPath: './generated/prisma-client',
      },
    },
    setupFiles: [
      'vitest-environment-prisma-postgres/setup',
      './vitest.setup.ts',
    ],
  },
});
```

Next, you mock your local Prisma client with the client provided by the new environment in vitest.setup.ts:

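```typescript
// vitest.setup.ts
import { vi } from 'vitest';

// prismaPostgresTestContext is the global exposed by the environment.
// Its client forwards every call to the current test's transaction.
vi.mock('./generated/prisma-client', () => ({
  default: prismaPostgresTestContext.client,
}));
```

Now all of your application code under test uses the transactional Prisma client.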

Library Internals

Let’s look at the internals of vitest-environment-prisma-postgres next, because there are a few important concepts hiding underneath. If you don’t care about the technical deep dive, you can skip this chapter and jump straight to setting up your test database.

Transactions & Connections

In Postgres, a transaction belongs to a single connection. If you want each test case to run inside its own database transaction, every query inside that test must use the same connection.

Prisma has the same requirement. Its interactive transactions are started by prisma.$transaction, which you can pass an async function to. The first argument passed into this async function is the so-called transaction client, which we will refer to as tx. It is similar to a Prisma client but exposes only model methods and raw query methods, and intentionally omits $transaction, $connect, and $disconnect, because you cannot start a transaction inside another transaction or change connections mid-transaction.

Using interactive transactions

The environment uses this fact to its advantage. Instead of manually managing a dedicated connection, it wraps each test in a Prisma interactive transaction. Prisma then guarantees that all queries executed through tx use the same connection.
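A Prisma interactive transaction only lasts as long as its callback, so a test-level transaction has to keep that callback suspended for the whole test and then make it fail on purpose. The sketch below shows one common way to do that; it is an assumption about the general technique, not the library's actual code, and `beginTestTransaction` and `RollbackSignal` are hypothetical names. To stay self-contained, it takes the transaction runner as a parameter instead of importing Prisma:

```typescript
// The one Prisma behavior this sketch relies on: an interactive transaction
// commits when its callback resolves and rolls back when the callback rejects.
type RunInTransaction<Tx> = (fn: (tx: Tx) => Promise<void>) => Promise<void>;

// Thrown on purpose so that every test-level transaction ends in a rollback.
class RollbackSignal extends Error {}

// Hypothetical helper: opens one transaction and keeps it open until end() is called.
async function beginTestTransaction<Tx>(runInTransaction: RunInTransaction<Tx>) {
  let finishTest: () => void = () => {};
  const testFinished = new Promise<void>((resolve) => {
    finishTest = () => resolve();
  });

  let exposeTx: (tx: Tx) => void = () => {};
  const txReady = new Promise<Tx>((resolve) => {
    exposeTx = resolve;
  });

  const settled = runInTransaction(async (tx) => {
    exposeTx(tx); // hand the transaction client to the test
    await testFinished; // keep the transaction (and its connection) open
    throw new RollbackSignal(); // reject so the transaction is rolled back
  }).catch((error: unknown) => {
    // Swallow only our own signal; genuine failures still surface.
    if (!(error instanceof RollbackSignal)) throw error;
  });

  // If the transaction fails before the callback runs, fail fast instead of hanging.
  const tx = await Promise.race([
    txReady,
    settled.then((): never => {
      throw new Error('Transaction ended before the test started');
    }),
  ]);

  return {
    tx,
    async end(): Promise<void> {
      finishTest();
      await settled;
    },
  };
}
```

With a real client you would pass something like `(fn) => prisma.$transaction(fn, { timeout: 60_000 })`, call it in beforeEach and `end()` in afterEach. Interactive transactions time out after 5 seconds by default, which a transaction held open for an entire test can easily exceed.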

The code that you want to test uses your normal Prisma client. To make it participate in the test-level transaction, vitest.setup.ts replaces that client with prismaPostgresTestContext.client, which forwards all operations to the current test’s tx.

You might assume that prismaPostgresTestContext.client could just be the transaction client. In practice, that breaks down quickly. The raw tx object cannot turn nested $transaction calls into savepoints, cannot override $connect or $disconnect, and only lives for the duration of one test.

Since tx is both short-lived and inflexible, the environment needs something more capable and more persistent than the transaction client itself.

Introducing a Proxy

The cleanest way to support the required use cases is to introduce a proxy in front of the transaction client. This Proxy, which exists for the entire test suite, is where Prisma operations can be intercepted to rewrite $transaction calls into savepoint queries, neutralize $connect and $disconnect, and add further safeguards.

Because tx only exists inside the callback of the outer interactive transaction, the environment assigns the active transaction client to the Proxy when the test-level transaction begins and clears it once the test-level transaction ends. If code touches the mocked Prisma client outside an active transaction, the Proxy can detect this immediately and fail.
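A stripped-down sketch of such a Proxy, under simplifying assumptions: the transaction client is modeled as a plain object, `context` is a hypothetical holder that the test-level transaction fills and clears, and the `$transaction` rewrite is left out:

```typescript
// Stand-in for Prisma's transaction client: model delegates plus raw query methods.
type TxClient = Record<string, unknown>;

// Hypothetical holder, set when a test-level transaction starts and cleared when it ends.
const context: { tx: TxClient | null } = { tx: null };

// Long-lived client handed to application code for the whole test file.
const client = new Proxy<Record<string, any>>({}, {
  get(_target, prop) {
    // Probed by `await` and by some tooling; report the client as not thenable.
    if (prop === 'then' || typeof prop === 'symbol') return undefined;
    // The test-level transaction owns the connection, so these become no-ops.
    if (prop === '$connect' || prop === '$disconnect') return async () => {};
    const tx = context.tx;
    if (tx === null) {
      throw new Error(`Prisma client used outside a test transaction (accessed "${prop}")`);
    }
    const value = tx[prop];
    // Bind methods so `this` still points at the transaction client.
    return typeof value === 'function' ? value.bind(tx) : value;
  },
});
```

Intercepting `$transaction` would happen in the same `get` trap, returning a function that runs the callback inside a savepoint on the current `tx`.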

In practice, this makes the Proxy the simplest and most robust way to present a stable, Prisma-like interface while routing all operations through the correct transactional context under the hood.

Savepoints for nested transactions

Inside a test, all queries run within the outer interactive transaction created by the environment. This means that a $transaction call in the code under test would normally try to start a new transaction on the same connection, which Postgres does not support: it has no nested transactions, only savepoints.

To keep the outer test-level transaction intact, the Proxy rewrites any $transaction call into a pair of Postgres savepoint operations:

- SAVEPOINT sp_n

- run the callback on the same tx client

- RELEASE SAVEPOINT sp_n on success

- ROLLBACK TO SAVEPOINT sp_n on error

This transforms $transaction into a lightweight, safe form of scoped work inside the test-level transaction without ever starting a nested transaction.

The important part is that all of this happens transparently: your application code continues to use $transaction as usual, and the environment simply ensures that these calls behave safely within the test-level transaction.
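The savepoint rewrite fits in a few lines. This is a sketch, not the library's source: `transactionViaSavepoint` and the `sp_<n>` naming are assumptions, and only the callback form of `$transaction` is handled (Prisma's array form is not). It relies on `$executeRawUnsafe`, one of the raw query methods the transaction client keeps:

```typescript
// The only capability needed from the transaction client: running raw SQL.
interface RawExecutor {
  $executeRawUnsafe(query: string): Promise<number>;
}

// Unique names so nested calls do not collide. Names are built from a counter,
// never from user input, so the unsafe raw method is acceptable here.
let savepointCounter = 0;

// Hypothetical replacement for $transaction(callback) while a test-level transaction is open.
async function transactionViaSavepoint<Tx extends RawExecutor, R>(
  tx: Tx,
  callback: (tx: Tx) => Promise<R>,
): Promise<R> {
  const name = `sp_${++savepointCounter}`;
  await tx.$executeRawUnsafe(`SAVEPOINT ${name}`);

  let result: R;
  try {
    result = await callback(tx); // same tx, same connection
  } catch (error) {
    // Undo only the work since the savepoint, then rethrow like $transaction would.
    await tx.$executeRawUnsafe(`ROLLBACK TO SAVEPOINT ${name}`);
    throw error;
  }

  await tx.$executeRawUnsafe(`RELEASE SAVEPOINT ${name}`);
  return result;
}
```

A failing inner "transaction" therefore discards only its own writes, while everything else the test did stays inside the outer transaction until the environment rolls it back.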

Setting up your test database

To get the most out of vitest-environment-prisma-postgres, you need two more things:

- A real database to run your tests against

- A way to create reliable test data at the beginning of a test run.

Here is how I set up both:

Using a Postgres Testcontainer

Vitest lets you define one or more globalSetup files that run setup code before your entire test suite starts and teardown code after it finishes. This is the perfect place to spin up external services like Postgres.

```typescript
// vitest.config.ts
import { defineConfig } from 'vitest/config';

export default defineConfig({
  test: {
    // further test configuration (see above)
    globalSetup: './vitest.globalSetup.ts',
  },
});
```

With Testcontainers you can spin up real Docker containers directly from your code. In this case it starts a real Postgres instance. No local installation, no manual setup. If you have a container runtime configured, you get an isolated, reproducible database every time your test suite starts.

To run shell commands during setup, I use zx. It lets you run commands through the $ template literal with proper interpolation. In the setup below, zx handles running Prisma migrations and seed scripts against the Postgres container that Testcontainers just launched.

Putting both together in globalSetup gives you a clean workflow. You start a Postgres container in the setup method, set DATABASE_URL, and run your migrations and seed logic. After all tests finish, the teardown method stops Postgres cleanly. This setup behaves the same locally and in CI and guarantees that every run starts from the same state.

Here’s a minimal vitest.globalSetup.ts example:

```typescript
// vitest.globalSetup.ts
import { $ } from 'zx';
import {
  PostgreSqlContainer,
  type StartedPostgreSqlContainer,
} from '@testcontainers/postgresql';

let container: StartedPostgreSqlContainer;

export async function setup() {
  container = await new PostgreSqlContainer('postgres:13.3-alpine').start();
  process.env.DATABASE_URL = container.getConnectionUri();

  $.verbose = true; // optional, useful for debugging

  // zx passes process.env to child processes, so both commands see DATABASE_URL.
  await $`npx prisma migrate deploy`;
  await $`npx prisma db seed`;
}

export async function teardown() {
  await container.stop();
}
```

Seeding with prisma-fabbrica

For seeding, I like to reuse the same factories that I use in tests. That is what prisma-fabbrica is great at: it generates strongly typed factories from your Prisma schema so you can create realistic data with proper relations in just a few lines of code.

In order to generate those typed factories, you configure it in your schema.prisma as a generator:

```prisma
generator fabbrica {
  provider = "prisma-fabbrica"
  output   = "./src/generated/prisma-fabbrica"
}
```

Prisma has a built-in seeding hook, and you tell Prisma how to run your seed mechanism in your package.json:

```json
{
  "prisma": {
    "seed": "tsx ./src/seed.ts"
  }
}
```

Using tsx lets you write your seed script directly in TypeScript without any build step.

When you run prisma db seed, Prisma executes this command, and the script picks up DATABASE_URL from the environment. In the Testcontainer setup above, this is exactly what happens inside globalSetup, after the Postgres instance has been migrated.

A minimal seed.ts using @quramy/prisma-fabbrica might look like this:

```typescript
// src/seed.ts
import { PrismaClient } from '@prisma/client';
import {
  initialize,
  defineUserFactory,
  defineOrganizationFactory,
} from './generated/prisma-fabbrica';

const prisma = new PrismaClient();

// Tell prisma-fabbrica which client the factories should write through.
initialize({ prisma });

async function seed() {
  const UserFactory = defineUserFactory();
  const OrganizationFactory = defineOrganizationFactory();

  const admin = await UserFactory.create({
    email: 'admin@example.com',
    name: 'Admin User',
  });

  await OrganizationFactory.create({
    name: 'Demo Organization',
    owner: { connect: { id: admin.id } },
  });
}

// No top-level await, so the script also runs when tsx treats it as CommonJS.
seed()
  .then(() => console.log('Database seed successful.'))
  .catch((error) => {
    console.error(error);
    process.exitCode = 1; // lets `prisma db seed` report the failure
  })
  .finally(() => prisma.$disconnect());
```

Summary

Integration tests surface issues that unit tests miss, reflect real system behavior, and provide the highest confidence. They’re also the first tests to slow down as your system grows. By seeding data upfront, and wrapping every test in a Postgres transaction, you can combine realism, isolation and speed.

My library, vitest-environment-prisma-postgres, is predictable, simple and fast, and automates a technique that has worked for decades in other ecosystems. And it took my own test suite from a bit under three hours to a bit under three minutes.

If you want to test your API against a real database without sacrificing speed, give this setup a try. Spread the word, share this article and the library with fellow JavaScript people, and let me know what roadblocks you run into!